Overview:

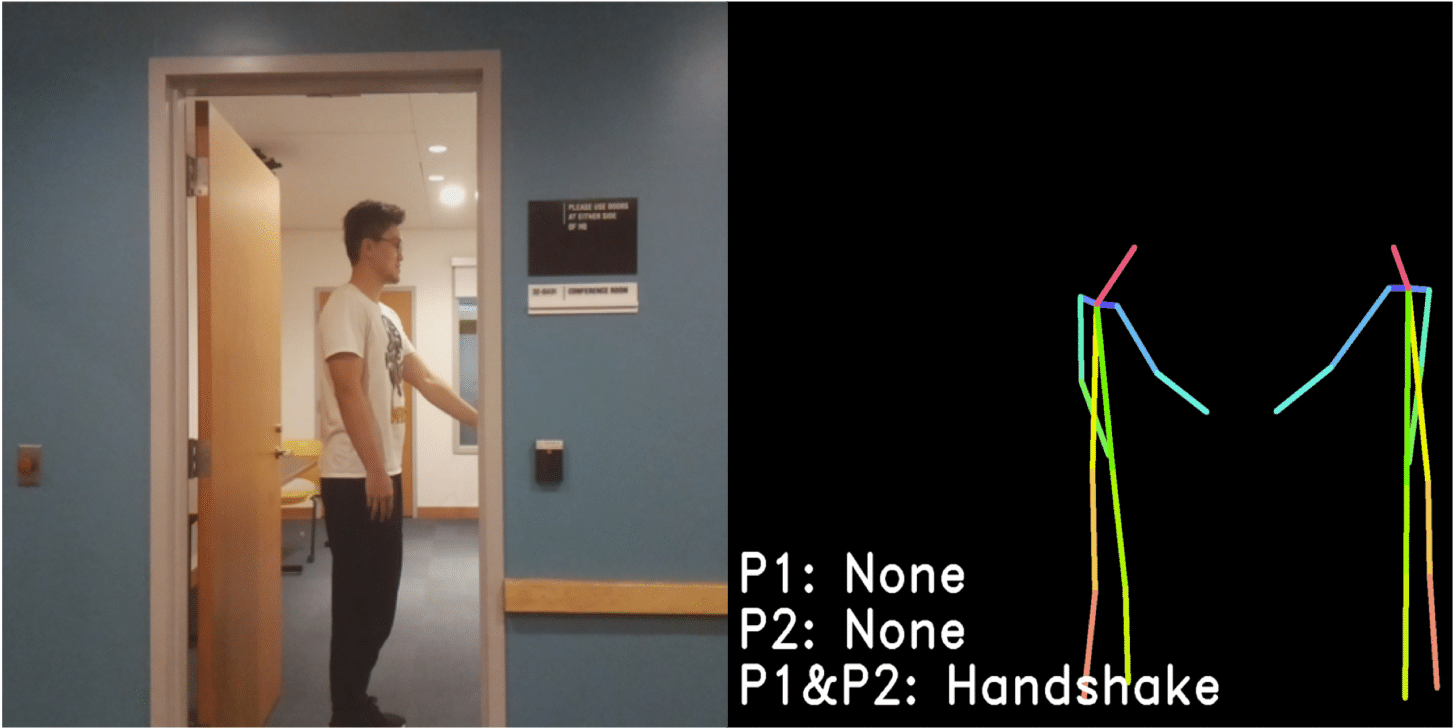

Understanding people’s actions and interactions typically depends on seeing them. But what if it is too dark, or the person is behind a wall? We introduce a model that can detect human actions through walls and occlusions, and in poor lighting conditions. Our model takes radio frequency (RF) signals as input, generates 3D human skeletons, and recognizes the actions and interactions of multiple people over time. It can learn from both vision-based and RF-based datasets. In visible scenarios it achieves accuracy comparable to vision-based action recognition systems, yet it continues to work accurately when people are not visible, addressing scenarios that are beyond the limits of today’s vision-based action recognition.